Study of Language Models: Evolution & Limitations

DOI: https://doi.org/10.54060/JMSS/002.01.006

Keywords: Language Models, Rule-based, Statistical-based, RNN, LSTM, Attention, Transformer

Abstract

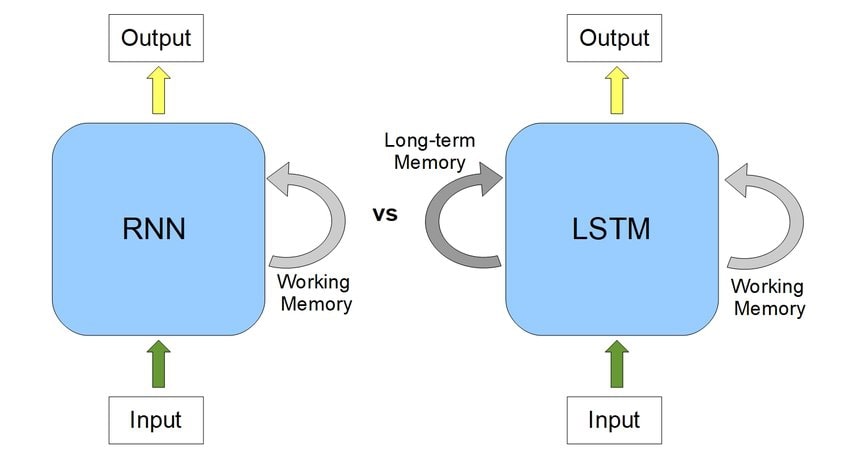

Language modeling has come a long way since the days when rule-based models were the predominant approach. Machine learning then came into play and changed the field. In this paper, we look at how recurrent neural networks (RNNs) did a much better job of generating output by conditioning on their previous results, and how long short-term memory (LSTM) addressed the limited memory of plain RNNs. We also look at how the Transformer outperforms RNNs combined with LSTM and has become the state-of-the-art architecture on which two of the best-known natural language processing models, BERT and GPT-3, are based.
